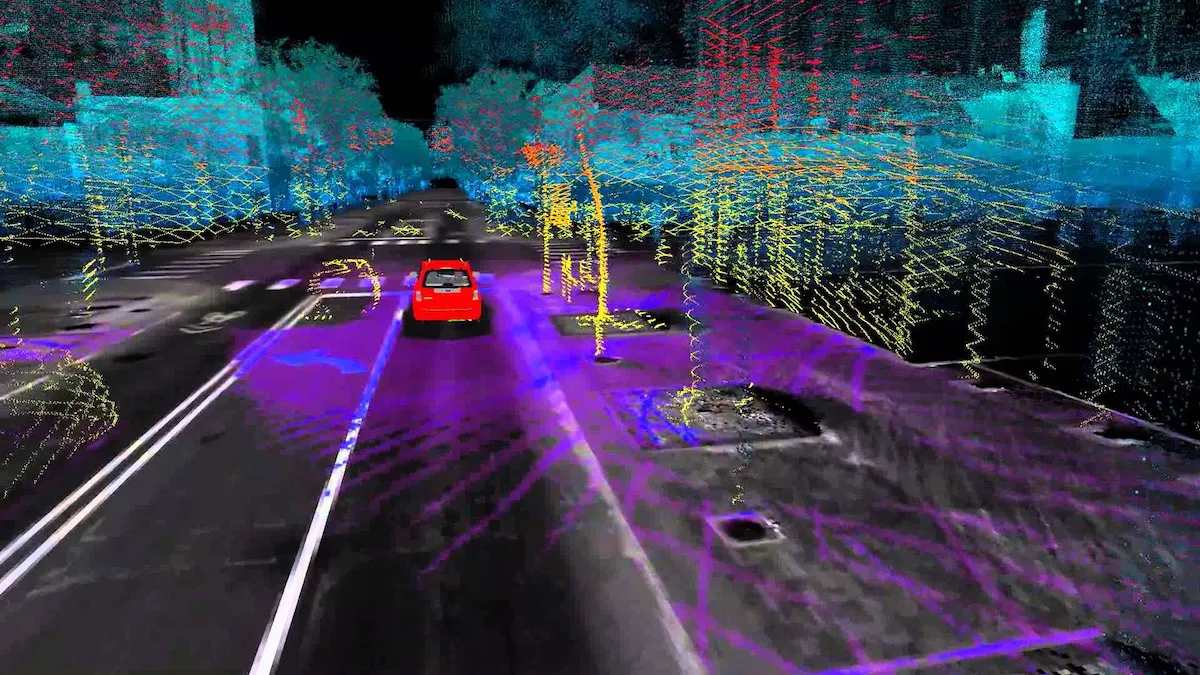

Many of us use GPS or Google Maps to find our way from one point to another. But how do autonomous vehicles navigate unmapped areas with no driver at the wheel? They use SLAM.

Simultaneous Localisation and Mapping (SLAM), introduced in the 1980s, is a method that allows an autonomous vehicle to continuously build a map of its surroundings while simultaneously working out its own position within that map. SLAM supports applications such as path planning and dynamic obstacle avoidance, which makes it especially useful for autonomous vehicles operating in uncharted terrain.
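To make the "simultaneous" part concrete, here is a minimal sketch, assuming a robot that moves along a single line and measures the signed distance to a few fixed landmarks. It uses a Kalman filter, the linear special case of the classic EKF-SLAM approach, and is not meant to represent what any particular vehicle runs. The robot's position and the landmark positions share one state vector, so each measurement corrects both the map and the robot's location at once. The class name `Slam1D` and its noise values are hypothetical.

```python
import numpy as np


class Slam1D:
    """Hypothetical 1-D SLAM sketch using a (linear) Kalman filter.

    State vector: [robot_position, landmark_0, landmark_1, ...].
    """

    def __init__(self, n_landmarks, motion_var=0.1, meas_var=0.05):
        self.x = np.zeros(1 + n_landmarks)
        # The robot starts at the origin with certainty; landmark positions
        # are unknown, so they get a very large initial variance.
        self.P = np.diag([0.0] + [1e6] * n_landmarks)
        self.motion_var = motion_var  # assumed odometry noise variance
        self.meas_var = meas_var      # assumed range-sensor noise variance

    def predict(self, u):
        """Localisation step: move by odometry reading u; robot uncertainty grows."""
        self.x[0] += u
        self.P[0, 0] += self.motion_var

    def update(self, landmark, z):
        """Mapping + localisation step: fuse z = landmark_position - robot_position."""
        H = np.zeros((1, self.x.size))
        H[0, 0] = -1.0
        H[0, 1 + landmark] = 1.0
        innovation = z - (H @ self.x)[0]
        S = (H @ self.P @ H.T)[0, 0] + self.meas_var
        K = (self.P @ H.T) / S  # Kalman gain, shape (n, 1)
        self.x = self.x + K[:, 0] * innovation
        self.P = (np.eye(self.x.size) - K @ H) @ self.P


# Usage: drive forward 1 m per step with noisy odometry and noisy ranges.
rng = np.random.default_rng(0)
true_landmarks = [2.0, 5.0]
true_pos = 0.0
slam = Slam1D(n_landmarks=2)
for _ in range(4):
    true_pos += 1.0
    slam.predict(1.0 + rng.normal(0.0, np.sqrt(slam.motion_var)))
    for i, lm in enumerate(true_landmarks):
        slam.update(i, lm - true_pos + rng.normal(0.0, np.sqrt(slam.meas_var)))
print(slam.x)  # robot estimate, then landmark estimates
```

Because the robot and the landmarks share one covariance matrix, the filter also tracks how errors in the robot's position correlate with errors in the map, which is what allows a later landmark sighting to correct earlier drift.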

A good example of SLAM in action can be found in home robot vacuum cleaners. When one first boots up, the robot scans its environment with its built-in sensors and builds a map of its surroundings as it moves. At the same time, it uses data from its cameras, accelerometer and other odometry sensors to determine its current location and work out an efficient cleaning path. This prevents it from cleaning the same spot multiple times.
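The odometry half of that loop can be sketched as below, assuming a two-wheeled (differential-drive) vacuum whose wheel encoders report how far each wheel has travelled. `integrate_odometry` is standard dead reckoning, not SLAM on its own: its error accumulates over time, which is exactly what the mapping half of SLAM corrects. `CoverageGrid` is a hypothetical structure showing how a record of visited cells lets the robot avoid cleaning the same spot twice; the 0.25 m cell size and 0.25 m wheel base are arbitrary assumptions.

```python
import math


def integrate_odometry(pose, d_left, d_right, wheel_base):
    """Dead-reckon a differential-drive robot from wheel travel distances.

    pose is (x, y, heading_rad); d_left and d_right are the distances (metres)
    each wheel travelled since the last update.
    """
    x, y, theta = pose
    d_centre = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / wheel_base
    # Midpoint approximation: travel along the average heading for this step.
    x += d_centre * math.cos(theta + d_theta / 2.0)
    y += d_centre * math.sin(theta + d_theta / 2.0)
    # Wrap the heading into [-pi, pi).
    theta = (theta + d_theta + math.pi) % (2 * math.pi) - math.pi
    return (x, y, theta)


class CoverageGrid:
    """Hypothetical record of which floor cells have already been cleaned."""

    def __init__(self, cell_size=0.25):
        self.cell_size = cell_size
        self.cleaned = set()

    def cell_of(self, x, y):
        return (math.floor(x / self.cell_size), math.floor(y / self.cell_size))

    def visit(self, x, y):
        """Mark the cell under (x, y); return False if it was already cleaned."""
        cell = self.cell_of(x, y)
        if cell in self.cleaned:
            return False
        self.cleaned.add(cell)
        return True


# Usage: drive straight for four steps, marking each cell as cleaned.
pose = (0.0, 0.0, 0.0)
grid = CoverageGrid()
for _ in range(4):
    pose = integrate_odometry(pose, 0.2, 0.2, wheel_base=0.25)
    grid.visit(pose[0], pose[1])
```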

In general, a SLAM system consists of two components. The first is the sensor-dependent front-end, which processes raw data from sensors such as cameras and Light Detection And Ranging (LiDAR), for example through image processing and motion analysis. The second is the back-end, which combines these processed measurements, using techniques such as probabilistic filtering, optimisation and machine learning, to refine the estimates of the vehicle's position and its map.
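The split between the two components can be sketched as follows, assuming the simplest possible setting: poses along a line, wheel odometry between consecutive poses, and a "loop closure" when the robot recognises a place it has seen before. The front-end here does no real perception; it only turns readings into constraints between poses, the role that feature extraction and matching play in a camera or LiDAR system. The back-end is graph-based least-squares optimisation, one common back-end technique among several. The function names and weights are hypothetical.

```python
import numpy as np


def front_end(odometry, loop_closures):
    """Front-end sketch: turn raw readings into graph constraints (edges).

    Each edge is (i, j, measured_offset, weight), meaning x_j - x_i ≈ offset.
    Weights act as inverse variances; loop closures are assumed more precise.
    """
    edges = [(k, k + 1, d, 1.0) for k, d in enumerate(odometry)]
    edges += [(i, j, d, 10.0) for i, j, d in loop_closures]
    return edges


def optimise_pose_graph(n_poses, edges):
    """Back-end sketch: 1-D pose-graph optimisation by weighted least squares."""
    rows, rhs = [], []
    # Strong prior pinning x_0 = 0, removing the global translation freedom.
    anchor = np.zeros(n_poses)
    anchor[0] = 1e3
    rows.append(anchor)
    rhs.append(0.0)
    for i, j, offset, weight in edges:
        row = np.zeros(n_poses)
        row[i], row[j] = -1.0, 1.0
        w = np.sqrt(weight)  # scale the residual so its square is weighted
        rows.append(w * row)
        rhs.append(w * offset)
    x, *_ = np.linalg.lstsq(np.vstack(rows), np.array(rhs), rcond=None)
    return x


# Usage: odometry overestimates each 1 m step; a loop closure says pose 3 is 3 m from pose 0.
poses = optimise_pose_graph(4, front_end([1.1, 1.1, 1.1], [(0, 3, 3.0)]))
print(poses)
```

Without the loop closure, the three 1.1 m odometry steps would place the last pose at 3.3 m; the loop-closure constraint pulls it back close to 3.0 m and spreads the correction evenly across all three steps.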

With faster computer processing, the greater availability of cheap and reliable sensors, and growing interest from the autonomous vehicle industry, SLAM has matured into a practical solution in the growing fields of robotics and autonomous vehicles.

At HTX, SLAM is currently one of the algorithms used in our ground robots, such as M.A.T.A.R. and ROVER-X. The next frontier for SLAM could be the air, as researchers are finding ways to deploy SLAM on Unmanned Aerial Systems so that they can fly autonomously.

ROVER-X uses SLAM to navigate around a chemical plant. (Photo credit: HTX)

Top image from Dr Ryan Eustice, University of Michigan.