Forget about the humanoid police droids in the 2015 movie Chappie that can conduct assault missions and protect their fellow human police partners. In this present reality, our robots are still learning how to run smoothly.

You might have caught wind of the Optimus robot at the Tesla demonstration event which made headlines around the world. At first glance, the robot did appear to be a futuristic marvel that represented a quantum leap in innovation. But it didn’t take too long before the media found out that much of what the robot was doing – dancing and pouring cocktails – was controlled remotely by humans.

So, no. We’re not about to get robotic home assistants the likes of those depicted in the 2004 blockbuster movie I, Robot.

Humanoid police droids like Chappie will be a reality, but probably not in the near future. (Photo: Columbia Pictures)

Before I list the current obstacles standing in the way of this happening, it would be useful to first understand the origins and evolution of robots.

A science fiction beginning

Contrary to what many people think, robots aren’t a modern invention.

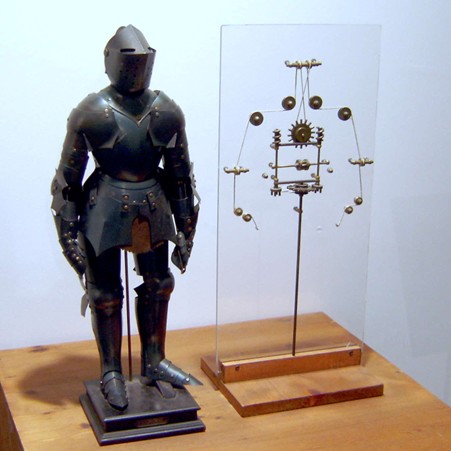

Since ancient times, humans have explored the idea of mechanical systems that work independently of human intervention. Such a system is known as an “automaton” (plural “automata”), a word that comes from the ancient Greek for “acting of one’s own will”. One of the earliest records of an automaton dates from 400 BC: a steam-powered flying pigeon [1] created by Archytas, who was later regarded as the founder of mathematical mechanics. Another famous automaton came in the form of an armoured Germanic knight [2] designed by Leonardo Da Vinci.

Until the beginning of the 20th century, the word “robot” was not found in English literature. It was introduced to the world in 1920 by Czech writer Karel Capek in his science-fiction play Rossum’s Universal Robots (R.U.R.). In the play, the term “robot”, derived from the Czech word “robota” (forced labour), refers to artificial slaves capable of thinking for themselves, which humans created from synthetic organic matter. The play concludes with the robots revolting and annihilating the human race.

Capek’s creation was so influential and successful in Europe and North America [3] at that time that the word “robot” began to displace “automaton” in languages around the world.

In today’s context, the robots that Capek had in mind would be more accurately known as androids since they were neither metallic nor mechanical.

Leonardo Da Vinci’s mechanical knight. (Photo: Erik Möller)

Science-fiction writer Isaac Asimov later proposed the Three Laws of Robotics, which essentially state that robots must not harm humans (First Law), must obey human orders (Second Law) and must protect their own existence as long as doing so does not conflict with the First and Second Laws (Third Law).

Interestingly, Asimov’s laws spearheaded the study of robot and machine ethics, which are subsets of today’s studies in artificial intelligence (AI) ethics [4].

20th Century robots

Robots would not exist in the way they do today without the advancement of computers. The robots that both Capek and Asimov wrote about, after all, were essentially artificially intelligent humans. An early example of an intelligent robot was Elsie, a wheel-based mobile robot built in 1948 by cybernetics researcher William Grey Walter that could seek out a light source and a recharging station. Walter’s concept of robots relied heavily on analogue electronics and the simulation of brain processes.

Elsie is known as the first robot in history that was programmed to “think” the way biological brains do and was meant to have free will [5]. It is considered the world’s first autonomous mobile robot (AMR), a type of robot that later came to be widely used across industries.

In the 1949 book Giant Brains, or Machines That Think, the author, a computer scientist named Edmund Callis Berkeley, suggested that computer models built after 1940 were comparable to human brains [6]. He rejected the narrow view that computers should only produce information and put forward the idea of a “robot machine that both thinks and acts”.

In 1954, Unimate, the world’s first digital programmable robotic arm, was born [7]. Invented by George Devol, it was a polar-coordinate arm sitting on a linear track controlled by magnetic signals on a revolving drum.

Unimate, the world’s first digital programmable robotic arm. (Photo: The Henry Ford)

The AI movement, which spurred new research in robotics at universities in the United States, Europe and Japan, started in the 1960s. It followed the 1950 publication of Computing Machinery and Intelligence by the famed Alan Turing, which proposed a test of machine intelligence called the Imitation Game, and John McCarthy’s famous workshop on AI at Dartmouth in 1956.

By 1973, Ichiro Kato, a pioneer in humanoid robotics, had built the world’s first full-scale anthropomorphic robot (Wabot) at Waseda University [8]. The Wabot sparked interest in social robotics among the Japanese robotics community, which subsequently led to the birth of some notable robots. One was the 1999 Sony Artificial Intelligence RoBOt (AIBO), a robotic pet dog designed to “learn” by interacting with its environment, owners and other AIBOs. Another was Honda’s Advanced Step in Innovative Mobility (ASIMO) humanoid robot. Launched in 2000, ASIMO could walk, climb stairs, recognise faces and gestures, and change its direction after detecting hazards.

Although Walter’s invention was considered the world’s first AMR, such robots did not enter the market until robotics pioneer Joseph Engelberger saw a potential use for them in the medical field and introduced HelpMate, a mobile robot hospital courier, which became commercially available in 1988. Within a decade, over 100 hospitals worldwide operated HelpMates [9] that were purchased or rented from Engelberger's company, which was later renamed HelpMate Robotics Inc.

HelpMate robots. (Photo: Wikimedia Commons)

By the end of the 20th century, automated guided vehicles (AGVs) were widely used in factories, warehouses and hospitals around the world.

Modern era robots

Although robots were well represented by industrial robots and AGVs in the manufacturing industries by the end of the 20th century, other sectors of the economy faced challenges in adopting the technology. One of the main reasons was that industrial robots were big, heavy, expensive and difficult to program (because of their proprietary programming languages), which made them unsuitable for small and medium-sized enterprises (SMEs).

With the aim of making robotics technology more accessible to SMEs, Esben Østergaard, Kasper Støy, and Kristian Kassow founded Universal Robots in 2003 and marketed the world’s first collaborative robot arms (cobots) in 2008. These robots could be safely operated alongside human workers, thus eliminating the need for safety cages or fencing. They were also more affordable than the big industrial ones, easier to maintain and, most importantly, easy to program [11].

Cobots became an instant success because the technology was able to penetrate sectors of the economy that industrial robots could not. Today, cobots are widely used in many industries and in academia, including in the service industry, where they serve as robot baristas and robot chefs.

Apart from creating the cobot, Østergaard was also responsible for the recent comeback of AMRs, which are now displacing AGVs in warehouses and factories around the world. Unlike AGVs, AMRs do not rely on fixed infrastructure like magnetic guide tapes, and hence offer users more flexibility.

Another robotic technology that has made a big leap in evolution is the quadrupedal robot. Made famous by Boston Dynamics, a robotics company that founder Marc Raibert spun off from MIT in 1992, the quadrupedal robot is seen as an autonomous legged robot that can traverse any terrain.

Where we are now

Today, robots play a key role in many sectors within society. In areas like manufacturing and warehousing, the use of robots has increased efficiency and productivity and allowed human workers to focus on other more pressing tasks.

Robots can also be found in the realm of homeland security. For example, HTX and the Singapore Police Force (SPF) have been working closely since as early as 2016 to develop an autonomous patrol robot called the Multi-purpose All Terrain Autonomous Robot (M.A.T.A.R.), as well as a similar robot called XAVIER.

The envisaged operations of these autonomous robots include conducting patrols along pre-defined routes at public spaces or along the perimeters of key installations. By carrying payloads such as 360-degree field of vision cameras, sensors, speakers, blinkers and sirens, these robots act as additional pairs of eyes on the ground to complement the work of our police officers. During the Covid-19 pandemic in 2020, these robots were put to good use when they performed patrols in a foreign worker dormitory in place of human officers, thus reducing the latter’s exposure to the virus. The SPF has even announced its plans to deploy such patrol robots progressively across Singapore.

But as useful as these robots are, they still lack the ability to react, a key capability that would give any patrol robot a clear edge over fixed surveillance cameras. An autonomous robot that can chase down a perpetrator in a public space or an intruder who trespasses would be ideal.

Xponents posing with the M.A.T.A.R. (Photo: SPF)

Robots also still lack the ability to interact well with their surrounding environment. Today, our structured environments (i.e. buildings, stairs, lifts, slopes, roads, pathways and corridors) are constructed according to human mobility and ergonomic needs. In addition, human biomechanics allows us to navigate unstructured environments such as undulating and uneven terrain. Replicating such biomechanics in robots is a major challenge that many are still attempting to tackle.

In recent years, quadruped robots have sparked the interest of many sectors because they boast greater mobility than conventional wheeled robots, but even such robots pale in comparison to humans when it comes to manoeuvrability.

And then there’s the issue of touch. Robots simply cannot grasp an object the way a human does because a host of computational, sensory and mechanical challenges have yet to be tackled.

Imagine opening a sachet of chilli sauce and squeezing it with just enough force so that the contents are spread evenly over a hot dog. Sounds simple? That’s due to our sense of touch, behind which is a complex nervous system that detects pressure, pain, temperature and vibrations, and our psychomotor skills, which have been honed over many years through learning from data that come in the form of repeated actions and mistakes.

In contrast, the robots of today can perhaps be compared to us when we are infants with yet-to-be developed brains, muscles and senses.

So, while we can say that humanity has come a long way in the development of robotics, it can also be argued that we’re still at the nascent stage even though centuries have passed since Leonardo Da Vinci created his automatons.

But given the rate at which technology is advancing today, I believe it won’t take centuries before we can create robots capable of performing the complex actions humans can.

While the current generation of roboticists is pursuing self-programming or “self-coding” robots (made possible by today’s advances in AI models, such as large multimodal models and eventually end-to-end robotics foundation models), the roboticists of tomorrow will perhaps focus on finding ways to hardcode Asimov’s Three Laws of Robotics into our own creations.

Why? To ensure that the ending to Capek’s play doesn’t happen.

Let’s just say we all know what might happen if we don’t take measures to control the robots we create. (Photo: Orion Pictures)

Dr Daniel Teo is the Director of HTX’s Robotics, Automation and Unmanned Systems Centre of Expertise. Besides robotics, a field in which he has more than 20 years of experience, Daniel is also interested in the fields of Mechatronics, Control, Physics, and Embodied AI. A holder of a PhD in Robotics, Daniel has over the course of his career been granted four patents and published more than 70 peer-reviewed papers and three book chapters. He was appointed as associate editor and technical reviewer of several top-tier conferences and peer-reviewed journals. A recipient of the IECON Best Paper Award in 2013 and the R&D 100 Award in 2014, Daniel represents HTX as a technical member of the Robotics & Automation Technical Committee (RATC), which develops and reviews safety standards for robotics and automation (RA) both at national and international levels.

References

[1] https://www.ancient-origins.net/history-famous-people/steam-powered-pigeon-002179

[2] https://www.history.com/news/7-early-robots-and-automatons

[3] Burien, Jarka M. (2007) "Čapek, Karel" in Gabrielle H. Cody, Evert Sprinchorn (eds.) The Columbia Encyclopedia of Modern Drama, Volume One. New York: Columbia University Press. pp. 224–225. ISBN 0231144229

[4] https://en.wikipedia.org/wiki/Ethics_of_artificial_intelligence

[5] https://gizmodo.com/the-very-first-robot-brains-were-made-of-old-alarm-cl-5890771

[6] https://monoskop.org/images/b/bc/Berkeley_Edmund_Callis_Giant_Brains_or_Machines_That_Think.pdf

[7] https://www.invent.org/inductees/george-devol

[8] https://robotsguide.com/robots/wabot

[9] https://spinoff.nasa.gov/spinoff2003/hm_4.html

[10] https://www.fredagv.com/blog/the-past-present-and-future-of-agvs

[11] https://www.therobotreport.com/cobot-pioneer-esebn-ostergaard-reinvest-robotics/